Top Kubernetes Tools in 2026


By Akssar


- Published on Jul 19 2022


Both Kubernetes and cloud-native technology have been making steady progress of late.

The most recent survey report from the CNCF indicates that the percentage of companies using Kubernetes for production purposes has climbed to 83 percent, up from 78 percent the previous year.

A typical production deployment involves many containers.

Kubernetes tools make the work of developers and operators easier, which in turn increases agility and speeds up the delivery of software.

Kubernetes has been a favorite among developers for several years, and it is now steadily making its way into production systems and becoming a mainstream component of the information technology industry.

Why Is Kubernetes Monitoring Important?

Under the hood, Kubernetes hides a great deal of functionality behind an abstraction layer.

Monitoring each component of the system (physical nodes, pods, containers, proxies, schedulers, and so on) is a large effort, because the components depend on one another in many ways.

In a high-traffic production setting, it can be challenging to manage a Kubernetes cluster effectively if the administrator does not put an appropriate monitoring approach in place.

One of the most important aspects to keep an eye on is the node resources, which may include the CPU, RAM, network latency, or disk I/O.

Monitoring the physical Kubernetes infrastructure is also essential. Another area to watch is the Pods (for example, the number of currently running Pods against the configured value, or the number of Pods per node group).

Containers come with their own metrics, which can be retrieved by issuing the command "docker stats."

Finally, the applications running inside the containers have their own metrics.

Continuously monitoring metrics from each tier of the cluster will not only help you identify the optimal configuration for each component but will also show you where a configuration needs to change.

In addition to tracking metrics, a good monitoring system should also be able to detect and report on anomalies and other occurrences that are not typical for the cluster, and it should do so in real-time.

Because of this, the team can analyze the underlying problem proactively and deliver a solution promptly.
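
As a rough illustration of what detecting anomalies in a metric can mean, the sketch below flags samples that deviate strongly from the readings just before them. It is a minimal example under stated assumptions, not how any particular monitoring product works: the function name `find_anomalies`, the window size, the threshold, and the sample CPU readings are all hypothetical.

```python
from statistics import mean, stdev


def find_anomalies(samples, window=5, threshold=3.0):
    """Return the indexes of samples that deviate strongly from the
    preceding `window` samples (a simple rolling z-score check)."""
    anomalies = []
    for i in range(window, len(samples)):
        history = samples[i - window:i]
        mu = mean(history)
        sigma = stdev(history)
        if sigma == 0:
            # Flat history: treat any change at all as unusual.
            if samples[i] != mu:
                anomalies.append(i)
            continue
        if abs(samples[i] - mu) / sigma > threshold:
            anomalies.append(i)
    return anomalies


# Hypothetical CPU usage readings (percent) for one node.
cpu_percent = [41, 43, 42, 40, 44, 42, 95, 43, 41]
print(find_anomalies(cpu_percent))  # -> [6]
```

A real system would usually combine a check like this with alert routing, so that the spike at index 6 reaches the team while it is still happening.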

In addition to these advantages, proactive monitoring also provides an application use history and trend analysis.

For instance, if the monitoring history reveals that the cluster only adds new nodes for pods on the weekends, you can investigate this further.

There may be surges in the number of client connections that are made to the load balancer at that time.

With such information at your disposal, you will be able to add some more headroom to the cluster to make certain that Nodes are easily accessible for Pods.
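
The weekend pattern described above can be spotted with a very small amount of analysis. The following sketch averages a daily node count per weekday; the data, the function name `average_nodes_by_weekday`, and the two-week sample period are invented for illustration.

```python
from collections import defaultdict
from datetime import date, timedelta

WEEKDAYS = ["Monday", "Tuesday", "Wednesday", "Thursday",
            "Friday", "Saturday", "Sunday"]


def average_nodes_by_weekday(daily_node_counts):
    """Average node count per weekday from (date, node_count) pairs."""
    by_day = defaultdict(list)
    for day, nodes in daily_node_counts:
        by_day[WEEKDAYS[day.weekday()]].append(nodes)
    return {name: sum(counts) / len(counts) for name, counts in by_day.items()}


# Two hypothetical weeks of node counts, starting on a Monday.
start = date(2022, 7, 11)
counts = [3, 3, 3, 3, 3, 6, 7, 3, 3, 4, 3, 3, 7, 6]
history = [(start + timedelta(days=i), n) for i, n in enumerate(counts)]

averages = average_nodes_by_weekday(history)
print(averages["Monday"], averages["Saturday"])  # -> 3.0 6.5
```

If the weekend averages are consistently about twice the weekday ones, as in this made-up data, that is a signal to provision extra headroom before the weekend rather than waiting for the autoscaler.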

The vast majority of monitoring solutions that are now available on the market will provide some level of insight into Kubernetes.

The Worsening Scenario for Large-Scale Businesses

Some enterprise businesses are going through growing pains because of a lack of expertise, complex deployments, and challenges in integrating new and existing systems and deployments.

This is happening as enterprises are accelerating their digital transformation and embracing the Kubernetes ecosystem.

In the most recent poll on the state of Kubernetes, which was carried out by VMware and titled "State of Kubernetes 2021," approximately 96 percent of respondents reported having trouble choosing a Kubernetes distribution.

The lack of internal experience and knowledge continues to be the most difficult obstacle to overcome when deciding (55 percent), although this percentage has decreased by 14 percent since last year, signaling substantial development.

Other significant obstacles were the difficulty in hiring the necessary skills (cited by 37 percent of respondents), the rapid pace of change brought on by Kubernetes and cloud-native technology (32 percent), and an excessive number of options from which to choose (30 percent).

As more individuals get acquainted with the ecosystem and it continues to develop, it is expected that the majority of these difficulties will resolve themselves on their own.

In this article, we will focus on one of the most difficult aspects: selecting the appropriate Kubernetes tools.

Based on reviews, votes, and comments made on social media platforms, we have compiled the following list of the most effective and widely used tools:


1) Sematext

Sematext Monitoring is a monitoring system that captures metrics and events in real-time. It can be used for monitoring conventional applications as well as microservice-based applications that are hosted on Kubernetes.

Once the data is collected, you can organize, view, and analyze it, as well as perform other operations on it.

Sematext Monitoring is a component of Sematext Cloud, which is a cloud monitoring solution that handles Kubernetes monitoring and logging so that you don't have to worry about hosting any storage or monitoring infrastructure on your own.

You can generate log analytics reports and customize monitoring dashboards using Sematext.

Additionally, you can set up alerts on metrics as well as logs. Compared with standard monitoring or command-line tools, this makes identifying faulty pods simpler and much faster.

You will be notified whenever an alert is triggered. Notifications may be sent to you through email, Slack, or any other notification hook that you want.

All you need to install is the Sematext Agent. It is available as a Helm chart, DaemonSet, or Kubernetes Operator.

Setup instructions for each can be essentially copied and pasted, making it very simple to get everything up and running.

You don't need to install anything extra since the Sematext Discovery feature enables you to continually find and monitor your containerized applications.

In addition, you can configure your containerized apps' performance monitoring and log monitoring straight from the user interface.

Discovery will search for services that you are monitoring when your containerized applications scale up and down or move between containers and hosts.

This will guarantee that containerized apps are immediately monitored as soon as they come online.

There is also an integration for Kubernetes Audit available, which will save you time and provide you with a variety of pre-built reports, charts, and alarms.

2) Kubernetes Dashboard

The Kubernetes Dashboard is a user interface (UI) add-on that is web-based and designed for Kubernetes clusters.

It makes managing, troubleshooting, and monitoring your environment straightforward to do.

You can use the Kubernetes Dashboard to monitor the health of workloads (pods, deployments, replica sets, cron jobs, and so on) and to see basic memory and CPU usage statistics across all of your nodes. The Dashboard can be installed quickly and simply using ready-made YAML files.

3) Prometheus

Prometheus is an open-source project. In contrast to the majority of other monitoring tools, it does not have a "free tier" that places limitations on what you can monitor or the amount of data that your systems may produce.

It has a vibrant community that can answer your questions about Prometheus or its use with Kubernetes (or has already done so), so don't hesitate to ask!

Out of the box, you have access to the query language known as PromQL. This allows you to search and retrieve metric data from the time-series database (TSDB) at your convenience.

Prometheus uses a pull mechanism: it scrapes metrics from HTTP endpoints that its targets expose. This sets it apart from conventional monitoring solutions that rely on installed agents pushing data, which might place an extra burden on the systems being monitored.

Configuring Prometheus to do service discovery in a network is a straightforward process. Prometheus has the capability of finding endpoints to scrape for metrics thanks to service discovery.

When you have many Kubernetes environments on your network and you don't want to add each one manually, this can be an effective solution.
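
To make the PromQL querying described above more concrete, here is a minimal sketch of how a client might build a request for the Prometheus HTTP API's instant-query endpoint (`/api/v1/query`) and read the JSON it returns. The server address, the helper names, and the sample response body are assumptions made up for this example; the sketch does not contact a real server.

```python
import json
from urllib.parse import urlencode


def build_query_url(base_url, promql):
    """Build the URL for an instant query against the Prometheus HTTP API."""
    return f"{base_url.rstrip('/')}/api/v1/query?{urlencode({'query': promql})}"


def parse_instant_vector(body):
    """Turn an instant-query JSON response into (labels, value) pairs."""
    payload = json.loads(body)
    if payload.get("status") != "success":
        raise ValueError(f"query failed: {payload.get('error', 'unknown error')}")
    return [
        (item["metric"], float(item["value"][1]))
        for item in payload["data"]["result"]
    ]


# Hypothetical response body, shaped like an instant-vector result.
SAMPLE_BODY = json.dumps({
    "status": "success",
    "data": {
        "resultType": "vector",
        "result": [
            {"metric": {"pod": "web-1"}, "value": [1658217600, "0.25"]},
            {"metric": {"pod": "web-2"}, "value": [1658217600, "1.5"]},
        ],
    },
})

url = build_query_url("http://prometheus.example:9090/", "up == 1")
print(url)
print(parse_instant_vector(SAMPLE_BODY))
```

In practice you would fetch the URL with an HTTP client and pass the response text to `parse_instant_vector`; sample values arrive as strings, which is why the sketch converts them with `float`.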

4) Grafana

Monitoring, metrics, data visualization, and analysis can all be performed with the help of Grafana, an open-source tool.

When compared to other visualization tools, Grafana stands out due to the extensive number of databases with which it can communicate.

Grafana is often installed on top of Prometheus when it is being used to monitor Kubernetes; however, it is also frequently used in conjunction with Graphite or InfluxDB.

You can construct complete monitoring dashboards using a broad range of graphs, including heatmaps, line graphs, bar graphs, histograms, and geo maps.

There are also a great many ready-made Kubernetes monitoring dashboards available for use.

In addition, Grafana comes with its own built-in notification system, as well as tools for filtering, annotations, data-source-specific querying, authentication and authorization, cross-organizational collaboration, and a great deal more besides.

Installing and using Grafana is a simple process. It has a large following in the Kubernetes community, and some deployment configuration files will automatically include a Grafana container in the stack.

5) Jaeger

The Jaeger Operator is an implementation of a Kubernetes Operator.

An operator is a piece of software that reduces the complexity of the operational tasks required to run another piece of software.

To put it another way, Operators are a technique for packaging, deploying, and managing applications using Kubernetes.

A Kubernetes application is one that is both deployed on Kubernetes and managed with the Kubernetes APIs and the kubectl (Kubernetes) or oc (OKD) tools.

To get the most out of Kubernetes, you will need a collection of APIs that are coherent and can be extended.

This will allow you to better support and manage the applications that operate on Kubernetes. On Kubernetes, Operators may be thought of as the runtime that controls this category of application.

Jaeger is a free tracing tool for monitoring and troubleshooting complicated distributed systems, such as those running on Kubernetes.

Uber Technologies released it as open source in 2016.

With Jaeger, users can do root cause analysis, monitor distributed transactions, propagate distributed contexts, analyze service dependencies, and optimize performance and latency.
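
To show the kind of reasoning that distributed tracing enables, the sketch below models a trace as a list of spans with parent links and computes how much time each span spent in its own work. This is an illustrative data model only, not Jaeger's client API: the `Span` class, its fields, and the sample trace are hypothetical, and the calculation assumes child spans run one after another rather than in parallel.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Span:
    span_id: str
    parent_id: Optional[str]  # None marks the root span of the trace
    operation: str
    duration_ms: float


def self_times(spans):
    """Time each span spent on its own work, excluding direct children.

    Assumes children of a span execute sequentially."""
    child_total = {}
    for span in spans:
        if span.parent_id is not None:
            child_total[span.parent_id] = (
                child_total.get(span.parent_id, 0.0) + span.duration_ms
            )
    return {
        span.operation: span.duration_ms - child_total.get(span.span_id, 0.0)
        for span in spans
    }


# A made-up trace for one checkout request.
trace = [
    Span("a", None, "GET /checkout", 120.0),
    Span("b", "a", "auth", 15.0),
    Span("c", "a", "charge-card", 100.0),
    Span("d", "c", "db query", 80.0),
]

times = self_times(trace)
slowest = max(times, key=times.get)
print(times)
print(slowest)  # -> db query
```

Here the root request takes 120 ms, but almost all of it is attributable to the database query two levels down, which is where a root cause investigation would start.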

Jaeger provides OpenTelemetry-based support for the programming languages Java, Node, Python, Go, and C++, as well as for a variety of data stores such as Cassandra, Elasticsearch, Kafka, and in-memory storage.

Learn more about how to utilize Jaeger as a distributed tracing system by reading the related reading material.

You have the option of using the Jaeger Operator or a DaemonSet configuration when you want to deploy Jaeger on Kubernetes.

6) Elastic Stack (ELK)

The ELK stack is one of the most widely used open-source log management systems available, including for Kubernetes.

It can also readily be used for monitoring, and many people do use it in this capacity.

It is a set of four tools that provides an end-to-end logging pipeline. Elasticsearch is a full-text search and analytics engine in which you can store Kubernetes logs.

Logstash is a log aggregator that collects, processes, and forwards captured logs to Elasticsearch for indexing. Kibana provides reporting and visualization capabilities.

Lastly, Beats are lightweight data shippers that send logs and metrics to Elasticsearch.

The Kubernetes and Docker monitoring beats that include auto-discovery are included with ELK by default.

You will be able to monitor performance at both the application and the system level with the assistance of Beats, which gathers logs, metrics, and information from Kubernetes and Docker.

7) cAdvisor

cAdvisor, short for Container Advisor, is a tool that gives users an understanding of the resource usage and performance characteristics of running containers.

It is a daemon that is always operating in the background that gathers, aggregates, processes, and outputs information on containers that are currently active.

For each active container, it maintains resource isolation settings, historical resource utilization, histograms of comprehensive historical resource usage, and network data. These details are sent out through an export on both the container and the machine levels.

cAdvisor performs metrics analysis for memory, CPU, file, and network utilization across all containers that are operating on a certain node.

On the other hand, it does not save this information permanently, which means that you will need a separate monitoring tool.

cAdvisor needs no separate installation, since it is already built into the kubelet binary.

The only question you have to answer is which metrics you want to measure. cAdvisor gathers a long list of them.

Some indicators, however, are worth watching regardless of what you are building, such as CPU, memory, file system, and network usage.
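
As an example of working with two such indicators, the sketch below derives CPU usage in cores from a cumulative CPU-seconds counter (the shape of cAdvisor's `container_cpu_usage_seconds_total`) and memory utilization from a working-set reading against a limit. The function names and sample numbers are hypothetical; this is a sketch of the arithmetic, not code taken from cAdvisor.

```python
def cpu_cores_used(prev_seconds, curr_seconds, interval_seconds):
    """Average CPU cores used between two readings of a cumulative
    CPU-seconds counter taken `interval_seconds` apart."""
    if interval_seconds <= 0:
        raise ValueError("interval_seconds must be positive")
    delta = curr_seconds - prev_seconds
    if delta < 0:
        # The counter went backwards, e.g. after a container restart:
        # treat the current value as the increase since the reset.
        delta = curr_seconds
    return delta / interval_seconds


def memory_utilization(working_set_bytes, limit_bytes):
    """Fraction of the memory limit in use, or None if no limit is set."""
    if limit_bytes <= 0:
        return None
    return working_set_bytes / limit_bytes


# Two hypothetical counter readings taken 30 seconds apart.
print(cpu_cores_used(120.0, 135.0, 30.0))                   # -> 0.5
print(memory_utilization(256 * 1024**2, 512 * 1024**2))     # -> 0.5
```

Because the CPU metric is a counter that only grows, the useful number is always its rate of change over an interval, which is what tools such as Prometheus compute when they process these metrics.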

8) Kube-state-metrics

Kube-state-metrics is a listening service that creates metrics on the state of Kubernetes objects by utilizing the Kubernetes API.

This service places more of an emphasis on the health of the objects themselves as opposed to the health of the components.

This reveals valuable information on the statuses of nodes, as well as the availability of deployment replicas, pod lifecycle statuses, certificate signing request statuses, and more.

The Kubernetes API server makes available information on the number of pods, nodes, and other Kubernetes objects, as well as their states of health and availability.

The Kube-state-metrics add-on makes these metrics much simpler to consume, and it also helps bring to light problems with the cluster's infrastructure, resource limits, or pod scheduling.

How does Kube-state-metrics work? It listens to the Kubernetes API and generates metrics about the status of Kubernetes objects.

These include the current condition of each node, the capacity of each node in terms of things like CPU and memory, the number of desired/available/unavailable/updated replicas for each Deployment, and the various pod statuses, such as waiting, running, ready, and so on.

After kube-state-metrics has been installed on your cluster, it will provide a wide variety of metrics in text format and make them available through an HTTP endpoint.

Any monitoring system that can gather Prometheus metrics can readily ingest this data.
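
To give a feel for that text format, the sketch below parses a few sample lines and reports Deployments that have fewer available replicas than desired. The metric names `kube_deployment_spec_replicas` and `kube_deployment_status_replicas_available` are ones kube-state-metrics exposes, but the sample values, the helper names, and the simplified parser are assumptions for this example: it ignores timestamps and escaped quotes in label values, which a production parser would need to handle.

```python
import re

SAMPLE_LINE = re.compile(r'^([a-zA-Z_:][a-zA-Z0-9_:]*)(?:\{(.*)\})?\s+(\S+)$')
LABEL = re.compile(r'(\w+)="([^"]*)"')

# Hypothetical scrape output in the Prometheus text format.
SCRAPE = """
# HELP kube_deployment_spec_replicas Number of desired pods for a deployment.
# TYPE kube_deployment_spec_replicas gauge
kube_deployment_spec_replicas{namespace="default",deployment="web"} 3
kube_deployment_spec_replicas{namespace="default",deployment="api"} 2
kube_deployment_status_replicas_available{namespace="default",deployment="web"} 3
kube_deployment_status_replicas_available{namespace="default",deployment="api"} 1
"""


def parse_metrics(text):
    """Parse metric lines into (name, labels, value) tuples."""
    samples = []
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and HELP/TYPE comments
        match = SAMPLE_LINE.match(line)
        if match is None:
            continue  # skip anything this simplified parser cannot read
        name, raw_labels, value = match.groups()
        samples.append((name, dict(LABEL.findall(raw_labels or "")), float(value)))
    return samples


def under_replicated(samples):
    """List (namespace, deployment, desired, available) where available < desired."""
    desired, available = {}, {}
    for name, labels, value in samples:
        key = (labels.get("namespace"), labels.get("deployment"))
        if name == "kube_deployment_spec_replicas":
            desired[key] = value
        elif name == "kube_deployment_status_replicas_available":
            available[key] = value
    return [
        (ns, dep, want, available.get((ns, dep), 0.0))
        for (ns, dep), want in sorted(desired.items())
        if available.get((ns, dep), 0.0) < want
    ]


print(under_replicated(parse_metrics(SCRAPE)))
```

With the sample data, only the `api` Deployment is reported, since it wants two replicas and has one available.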

9) Datadog

An application performance management (APM) solution, Datadog gives you the ability to retrieve logs, metrics, events, and service statuses in real-time from Kubernetes.

Monitoring, troubleshooting, and improving application performance are all made possible with its help.

Dashboards, high-resolution metrics and events, and the ability to manipulate and visualize this data are all included in Datadog.

In addition, you can configure notifications and alarms to be sent to a variety of channels, such as Slack and PagerDuty.

Installing the Datadog Agent is a simple process. You may do so by using a DaemonSet, which will then be distributed to each node in the cluster.

After the Datadog Agent has been properly installed, data about resource metrics and events will begin to be imported into Datadog.

You can examine the information by using the built-in Kubernetes dashboard that is provided by Datadog.

Datadog provides its customers with a wide range of application monitoring capabilities, which enable them to search, filter, and examine logs in a short amount of time for troubleshooting and open-ended exploration of data, thereby improving the performance of the application, the platform, and the service.

Datadog can gather data and events across the whole DevOps stack in a seamless manner thanks to its more than 350 vendor-supported connections (including more than 75 interfaces with AWS services).

On a single platform, Datadog offers end-to-end insight into both on-premises and cloud settings. While it remains a leading choice, exploring Datadog and its alternatives can help identify other solutions that might better align with specific budgets, integration needs, or feature preferences.

The Datadog platform makes it possible for engineering teams to investigate application performance issues, regardless of the size of the cluster or the number of hosts that are affected.

Customers are also given the ability to communicate SLA/SLO adherence and key performance indicators (KPIs) across engineering teams, executives, and external stakeholders thanks to this solution.

Datadog is an AWS Advanced Technology Partner and has the AWS Migration, Microsoft Workloads, DevOps, and Containers Competencies.


10) New Relic

You can get an overall picture of your servers, hosts, apps, and services with the help of the monitoring tool New Relic, which integrates Kubernetes.

You may collect data and information for nodes, pods, containers, deployments, replica sets, and namespaces.

It also offers sophisticated search capabilities, along with tag-driven alerting and dashboarding.

The integration includes a cluster explorer that gives a representation of a Kubernetes cluster in several dimensions.

Explore the data and metadata of Kubernetes using dashboards that have already been made. Teams may more rapidly diagnose failures, bottlenecks, and other odd behavior across their Kubernetes systems by using cluster explorer.

The New Relic Kubernetes integration will monitor and keep track of the total amount of memory and core utilization that is occurring across all of your cluster's nodes.

This helps you make sure the cluster meets the resource requirements your application needs to run at its best.

11) Sensu

Sensu provides a full-stack observability pipeline that enables users to gather, filter, and convert monitoring events before sending them to a database of their choice.

To get the most out of both of these technologies, Sensu and Prometheus may be operated in your Kubernetes cluster in parallel with one another.

You may also run it without the need for Prometheus if you want to.

However, optimal performance can be achieved by deploying Sensu as a DaemonSet in conjunction with Prometheus.

This is because sending application-level metrics to Prometheus requires loading the Prometheus Software Development Kit (SDK) into your application codebase and making a metrics endpoint available. After that, the endpoint is scraped, and the information is saved on the Prometheus Server.

This can be a very tedious process. By using a concept known as a sidecar, Sensu circumvents this complication.

A Sensu agent is deployed alongside your application. The agent continuously collects metrics and exposes them to the Prometheus server, all without the need for you to make any changes to the application source.

You will get system metrics, such as the amount of time spent using the CPU, RAM, and disk space, in addition to bespoke application metrics and logs, which you will then be able to export to a database of your choice.

12) Popeye

Popeye is a utility that examines live Kubernetes clusters for possible problems with deployed resources and configurations.

It assesses your cluster based on what is actually deployed, rather than what is stored on disk.

By scanning your cluster, it reveals misconfigurations and helps you ensure that best practices are being followed, thus preventing further difficulties in the future.

Its goal is to alleviate the mental strain that occurs as a result of managing a Kubernetes cluster in a production environment.

In addition, if your cluster makes use of a metric-server, it will report any possible over- or under-allocation of resources and will try to alert you if your cluster reaches its maximum capacity.
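
The over- and under-allocation check mentioned above comes down to comparing what a workload requests with what it actually uses. The sketch below illustrates the idea; it is not Popeye's implementation, and the function name, the 30 percent and 90 percent thresholds, and the pod figures are all hypothetical.

```python
def classify_allocation(requested_millicores, used_millicores, low=0.3, high=0.9):
    """Classify a CPU request against observed usage.

    `low` and `high` are illustrative utilization thresholds."""
    if requested_millicores <= 0:
        return "no-request"
    utilization = used_millicores / requested_millicores
    if utilization < low:
        return "over-allocated"   # requests far more than it uses
    if utilization > high:
        return "under-allocated"  # uses nearly all, or more than, its request
    return "ok"


# Hypothetical pods: name -> (requested millicores, used millicores).
pods = {
    "web": (500, 100),
    "api": (200, 195),
    "worker": (1000, 600),
    "cron": (0, 20),
}

report = {name: classify_allocation(req, used) for name, (req, used) in pods.items()}
print(report)
```

The usage figures would come from the metrics server in a real cluster; without it, only the requests are known and no comparison is possible.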

Popeye is a read-only tool, which means that it does not modify any of the Kubernetes resources that you have.

Popeye analyzes your cluster to check for best practices and possible problems. At the moment, Popeye only inspects nodes, pods, namespaces, and services, with more planned.

Its maintainers hope that the Kubernetes community will collaborate with them to make Popeye even better.

The purpose of the sanitizers is to identify incorrect setups, such as port mismatches, dead or unused resources, metrics usage, probes, container images, RBAC rules, naked resources, and so on.

Which Monitoring Tool Will You Use to Keep an Eye on Your Kubernetes Cluster?

Your monitoring requirements and use case should guide your selection of technology. When you first enter the Kubernetes ecosystem, you will most likely run across CNCF projects before anything else, so those are the ones you will probably try first.

If you have the necessary resources, you may install and operate such tools on your cluster and even administer them on your own.

They have the benefit of being backed by huge, active communities that are determined to enhance current solutions, which increases the likelihood that you will obtain assistance if you need it.

SaaS software, on the other hand, is supported by a team of professionals who are available to answer questions, and more crucially, it eliminates the burden of personally maintaining the tools required to monitor Kubernetes.

It aids in managing the complexity of the situation so that you can concentrate on developing your product and adding value.

When it comes to selecting a monitoring system, our comprehensive guide on alerting and monitoring has a checklist that you may consult if you need assistance in deciding. Sematext meets all of the requirements.

Conclusion

This list of top Kubernetes tools is designed to help you maximize your efforts in the cloud. The world has moved to the cloud and it is here to stay.

This has opened up a number of lucrative career paths on the cloud platform. Taking a training course from a reputed and authorized training partner like Sprintzeal can go a long way in establishing a lucrative career in cloud computing.

Check out our AWS Certification Training Solution Architect, and our AWS SysOps Certification Training Course

Our training programs are designed in line with industry standards by subject matter experts. The training is imparted by our trainers with over 10 years of experience.

Join us and start a lucrative career in Cloud Computing today!

Read these articles to learn more-

Fundamentals Of Cloud Computing

Future Of Cloud Computing

Impact Of AWS Certification On Cloud Computing Jobs

Popular Programs

AWS Certified Solution Architect Professional

Live Virtual Training

- 4.5 (300 + Ratings)

- 14k + Learners

AWS Certified DevOps Engineer Certification Training

Live Virtual Training

- 4.8 (400 + Ratings)

- 54k + Learners

Microsoft Azure Administrator Associate AZ-104

Live Virtual Training

- 4.2 (560 + Ratings)

- 7k + Learners

Microsoft Azure Infrastructure Solutions (AZ-305)

Live Virtual Training

- 4.2 (560 + Ratings)

- 12k + Learners

Trending Posts

Trends Shaping the Future of Cloud Computing

Last updated on Jun 4 2024

What Is Microsoft Azure? A Complete Cloud Computing Guide for 2026

Last updated on Dec 18 2025

The Impact of Internet of things on Marketing

Last updated on Mar 30 2023

Azure Architecture - Detailed Explanation

Last updated on Oct 7 2024

Public Cloud Security Checklist for Enterprises

Last updated on May 19 2025

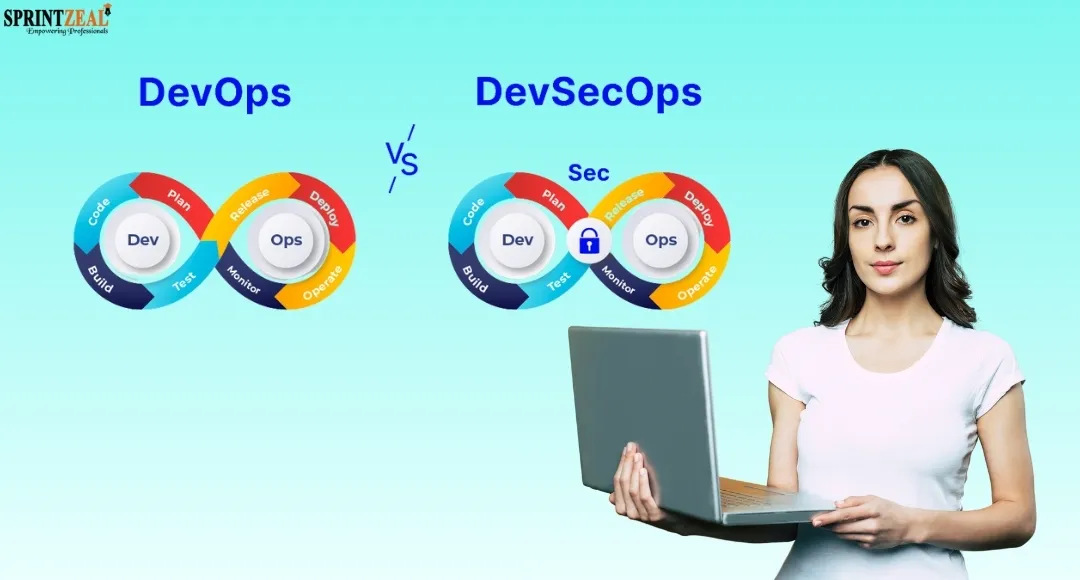

DevOps vs DevSecOps: Benefits, Challenges, and Comparison

Last updated on Jan 21 2026

Categories

- Other 83

- Agile Management 49

- Cloud Computing 58

- Project Management 175

- Data Science 71

- Business Management 89

- Digital Marketing 88

- IT Service Management 36

- Programming Language 61

- AI and Machine Learning 94

- IT Security 113

- Quality Management 78

- IT Hardware and Networking 28

- Microsoft Program 5

- Workplace Skill Building 15

- Risk Management 10

- Information Security 8

- Leadership and Management 10

- Corporate Training and Development 1

Trending Now

Azure Vs Aws - Which Technology Is Better

ebookThe Impact of Internet of things on Marketing

ebookAWS Lambda - An Essential Guide for Beginners

ebookCareer in Cloud Computing or Cyber Security

ebookImpact of AWS Certification On Cloud Computing Jobs

ebookAmazon Certifications: List of Top AWS certifications in 2026

ebookAWS Interview Questions and Answers 2026

ebookAmazon Software Development Manager Interview Questions and Answers 2026

ebookAWS Architect Interview Questions - Best of 2026

ebookHow to Become a Cloud Architect - Career, Demand and Certifications

ebookWhat is Cloud Computing? - Fundamentals of Cloud Computing

ebookAWS Solutions Architect Salary in 2026

ebookAmazon EC2 - Introduction, Types, Cost and Features

ebookAWS Opsworks - An Overview

ebookAzure Pipeline Creation and Maintenance

ebookCI CD Tools List - Best of 2026

ebookTrends Shaping the Future of Cloud Computing

ebookContinuous Deployment Explained

ebookDevOps Career Path – A Comprehensive Guide for 2026

ebookBenefits of Cloud Computing in 2026

ebookJenkins Interview Questions and Answers (UPDATED 2026)

ArticleA Step-by-Step Guide to Git

ArticleScalability in Cloud Computing Explained

ebookIoT Security Challenges and Best Practices-An Overview

ebookHow to Learn Cloud Computing in 2026 - A Brief Guide

ArticleCloud Engineer Roles and Responsibilities: A complete Guide

ebookTypes of Cloud Computing Explained

ArticleCloud Engineer Salary - For Freshers and Experienced in 2026

ArticleEssential Cybersecurity Concepts for beginners

ebookWhat is a Cloud Service - A Beginner's Guide

ebookTop 3 Cloud Computing Service Models: SaaS | PaaS | IaaS

ArticleWhat is Private Cloud? - Definition, Types, Examples, and Best Practices

ebookWhat Is Public Cloud? Everything You Need to Know About it

ArticleTop 15 Private Cloud Providers Dominating 2026

ebookWhat Is a Hybrid Cloud? - A Comprehensive Guide

ebookCloud Computing and Fog Computing - Key Differences and Advantages

ebookAzure Architecture - Detailed Explanation

ArticleMost Popular Applications of Cloud Computing – Some Will Shock You

ArticleTips and Best Practices for Data Breaches in Cloud Computing

ArticleWhat Is Edge Computing? Types, Applications, and the Future

ArticleMust-Have AWS Certifications for Developers in 2026

ArticleSalesforce Customer Relationship Management and its Solutions

ArticleCutting-Edge Technology of Google Cloud

ArticleSpotify Cloud: Powering Music Streaming Worldwide

ArticlePublic Cloud Security Checklist for Enterprises

Article12 Best Managed WordPress Hosting Services in 2026

ArticleLatest Azure Interview Questions for 2026

ArticleTop Coding Interview Questions in 2026

ArticleLatest Cloud Computing Interview Questions 2026

ArticleSafe file sharing for teams: simple rules that work

ArticleMy learning path to become an AWS Solutions Architect

ArticleClient Server Model—Everything You Should Know About

ArticleWhat Is Microsoft Azure? A Complete Cloud Computing Guide for 2026

ArticleDocker Tutorial for Beginners: Containers, Images & Compose

ArticleGit Merge vs Rebase: Differences, Pros, Cons, and When to Use Each

ArticleThe Invisible Infrastructure Powering Tomorrow’s Apps

ArticleDevOps vs DevSecOps: Benefits, Challenges, and Comparison

Article

.png)